
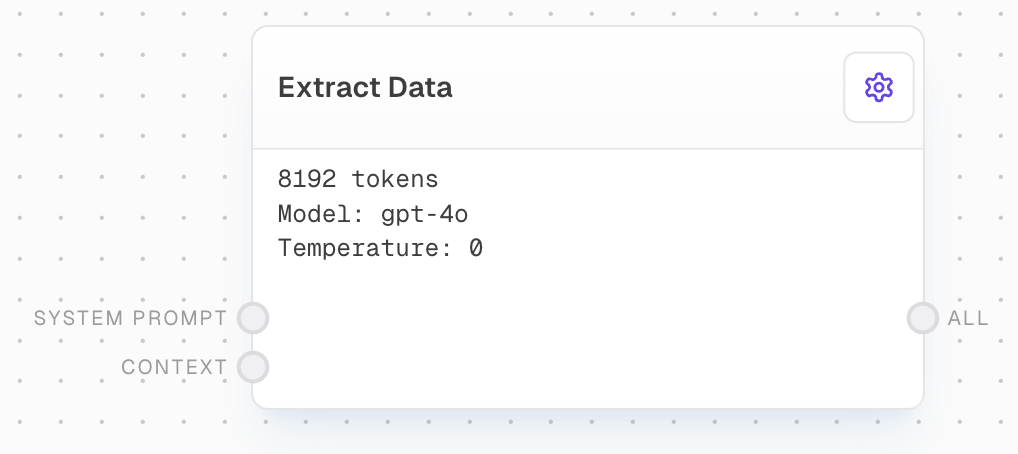

Extract Data Block

Leverage AI to extract structured data from unstructured text based on a flexible schema

Overview

The Extract Data Block uses an AI model to extract structured data from unstructured text. You define a flexible schema that lists the fields to extract and their data types, along with descriptions that guide the model toward the information you want.

Inputs

System prompt (optional): the system prompt to send to the model, used to give it high-level guidance.

Context (required): the text to extract the schema's fields from.

Outputs

An array of objects containing all extracted data, structured according to the defined schema.

For each field defined in the schema, an array of the values extracted for that field. Each element has the type declared for that field in the schema.
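The two outputs are presumably related: each per-field array is what you get by collecting that field from every object in the main array. The sketch below illustrates that relationship, assuming the extracted objects are plain dictionaries keyed by field name. The helper name `per_field_arrays` is hypothetical and is not part of the block.

```python
from typing import Any


def per_field_arrays(objects: list[dict[str, Any]], field_names: list[str]) -> dict[str, list[Any]]:
    """Collect each schema field's values across all extracted objects.

    Objects that lack a field are skipped for that field's array.
    """
    return {name: [obj[name] for obj in objects if name in obj] for name in field_names}


extracted = [
    {"name": "Ada", "email": "ada@example.com"},
    {"name": "Bob", "email": "bob@example.com"},
]
print(per_field_arrays(extracted, ["name", "email"]))
```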

The time in milliseconds that the block took to execute.

Editor Settings

The AI model to use for data extraction. Available models are dynamically populated based on the LLM provider configuration.

The schema defining what data to extract. Specify field names, types, and descriptions to guide the extraction.
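One way to picture the schema setting is as a list of fields, each with a name, a type, and a description, that can be converted into a JSON Schema describing an array of objects. The sketch below assumes that representation. `SchemaField`, `to_json_schema`, and the type names `string`, `number`, and `boolean` are illustrative, and the block's actual internal format may differ.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class SchemaField:
    name: str
    type: str  # assumed type names: "string", "number", or "boolean"
    description: str  # guidance for the model about what this field means


def to_json_schema(fields: list[SchemaField]) -> dict[str, Any]:
    """Describe the extraction result as a JSON Schema array of objects."""
    return {
        "type": "array",
        "items": {
            "type": "object",
            "properties": {
                f.name: {"type": f.type, "description": f.description} for f in fields
            },
            # Treat every schema field as required in each extracted object.
            "required": [f.name for f in fields],
        },
    }
```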

Parameters

What sampling temperature to use. Higher values like 0.8 make output more random, while lower values like 0.2 make it more focused and deterministic.
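Temperature is commonly applied by dividing the model's logits by the temperature before the softmax, so lower values sharpen the distribution and higher values flatten it. The sketch below shows that standard formulation. It is an illustration of the general technique, not the provider's implementation, and the function name is hypothetical.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn logits into probabilities after scaling them by 1 / temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


focused = softmax_with_temperature([2.0, 1.0, 0.0], 0.2)
random_ish = softmax_with_temperature([2.0, 1.0, 0.0], 0.8)
print(focused[0], random_ish[0])  # the top token gets more mass at 0.2
```

Many APIs treat a temperature of 0 as greedy decoding; this sketch simply rejects non-positive values.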

Alternative to temperature sampling. Only the most likely tokens whose cumulative probability reaches P are considered. For example, 0.1 means only the smallest set of top tokens that together make up 10% of the probability mass is considered.

Whether to use top p sampling instead of temperature sampling.
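Top-p (nucleus) sampling keeps the smallest set of most likely tokens whose probabilities add up to at least P, drops the rest, and renormalizes. The sketch below implements that filtering step on a plain probability list; the function name `top_p_filter` is hypothetical, and the provider's own implementation is not shown here.

```python
def top_p_filter(probs: list[float], top_p: float) -> list[float]:
    """Zero out tokens outside the top-p nucleus and renormalize the rest."""
    # Visit tokens from most to least likely.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept: set[int] = set()
    cumulative = 0.0
    for i in order:
        kept.add(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break  # the nucleus now covers at least top_p of the mass
    total = sum(probs[i] for i in kept)
    return [probs[i] / total if i in kept else 0.0 for i in range(len(probs))]


print(top_p_filter([0.5, 0.3, 0.15, 0.05], 0.75))
```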

The maximum number of tokens to generate in the completion.

A sequence where the API will stop generating further tokens.
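APIs stop generating as soon as a stop sequence appears, so the returned text ends just before it. The sketch below reproduces that effect after the fact on a finished string, assuming the stop sequence itself is not included in the output; `apply_stop_sequences` is a hypothetical helper.

```python
def apply_stop_sequences(text: str, stops: list[str]) -> str:
    """Cut text at the earliest occurrence of any stop sequence."""
    cut = len(text)
    for stop in stops:
        if not stop:
            continue  # ignore empty stop strings
        idx = text.find(stop)
        if idx != -1:
            cut = min(cut, idx)
    return text[:cut]


print(repr(apply_stop_sequences("Name: Ada\nEND\nextra", ["END"])))
```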

Number between -2.0 and 2.0. Positive values penalize new tokens based on whether they appear in the text so far, increasing the model’s likelihood to talk about new topics.

Number between -2.0 and 2.0. Positive values penalize new tokens based on their existing frequency in the text so far, decreasing the model’s likelihood to repeat the same line verbatim.
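OpenAI's API documentation has described both penalties as adjustments to each token's logit: subtract the frequency penalty once per prior occurrence of the token, and subtract the presence penalty once if the token has appeared at all. The sketch below applies that formula to a dictionary of logits; other providers may handle these parameters differently, and `apply_penalties` is a hypothetical helper.

```python
from collections import Counter


def apply_penalties(
    logits: dict[str, float],
    generated: list[str],
    presence_penalty: float,
    frequency_penalty: float,
) -> dict[str, float]:
    """Lower the logits of tokens that already appear in the generated text."""
    counts = Counter(generated)
    adjusted = {}
    for token, logit in logits.items():
        c = counts[token]  # 0 for tokens not generated yet
        adjusted[token] = (
            logit
            - c * frequency_penalty  # grows with each repetition
            - (1.0 if c > 0 else 0.0) * presence_penalty  # flat, once present
        )
    return adjusted


print(apply_penalties({"a": 1.0, "b": 1.0, "c": 1.0}, ["a", "a", "b"], 0.5, 0.25))
```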

If specified, OpenAI will make a best effort to sample deterministically, such that repeated requests with the same seed and parameters should return the same result.

Advanced

If enabled, responses to requests with identical parameters and messages are cached, so a repeated request is answered immediately without an API call.
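A cache like this can be keyed on a stable hash of the request parameters and messages. The sketch below is a minimal in-memory version, assuming both are JSON-serializable; `ResponseCache` and its methods are hypothetical and do not describe how the block stores its cache.

```python
import hashlib
import json
from typing import Any, Callable


class ResponseCache:
    def __init__(self) -> None:
        self._store: dict[str, Any] = {}

    @staticmethod
    def key(params: dict[str, Any], messages: list[dict[str, str]]) -> str:
        # sort_keys makes the key independent of dict insertion order.
        payload = json.dumps({"params": params, "messages": messages}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_call(
        self,
        params: dict[str, Any],
        messages: list[dict[str, str]],
        call: Callable[[], Any],
    ) -> Any:
        """Return a cached response, or make the call once and cache it."""
        k = self.key(params, messages)
        if k not in self._store:
            self._store[k] = call()
        return self._store[k]
```

Any change to a parameter, such as the temperature, produces a different key and therefore a fresh call.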

If enabled, streaming responses from this node will be shown in Subgraph nodes that call this graph.

If enabled, the node will error if any of the results returned do not conform to the schema.
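Strict conformance checking amounts to verifying that every returned object has each schema field with a value of the declared type. The sketch below assumes a simple schema mapping field names to the type names `string`, `number`, and `boolean`; `validate_results` and `SchemaMismatchError` are hypothetical names.

```python
from typing import Any, Callable

# Assumed mapping from schema type names to Python type checks.
_TYPE_CHECKS: dict[str, Callable[[Any], bool]] = {
    "string": lambda v: isinstance(v, str),
    # bool is a subclass of int in Python, so exclude it explicitly.
    "number": lambda v: isinstance(v, (int, float)) and not isinstance(v, bool),
    "boolean": lambda v: isinstance(v, bool),
}


class SchemaMismatchError(ValueError):
    pass


def validate_results(results: list[dict[str, Any]], schema: dict[str, str]) -> None:
    """Raise SchemaMismatchError on the first result that does not fit the schema."""
    for i, obj in enumerate(results):
        for field, type_name in schema.items():
            if field not in obj:
                raise SchemaMismatchError(f"result {i}: missing field {field!r}")
            if not _TYPE_CHECKS[type_name](obj[field]):
                raise SchemaMismatchError(f"result {i}: field {field!r} is not a {type_name}")
```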

Error Handling

The block retries failed attempts up to 3 times with exponential backoff:

- Minimum retry delay: 500ms

- Maximum retry delay: 5000ms

- Retry factor: 2.5x

- Includes randomization

- Maximum retry time: 5 minutes
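The retry policy listed above can be sketched as follows. The numbers come from the list; the randomization scheme (multiplying each delay by a random factor in [1, 2) before applying the cap) is an assumption modeled on common backoff libraries, and `backoff_delay_ms` and `call_with_retries` are hypothetical names.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")

# Values from the retry policy listed above.
MAX_RETRIES = 3
MIN_DELAY_MS = 500
MAX_DELAY_MS = 5000
FACTOR = 2.5
MAX_RETRY_TIME_MS = 5 * 60 * 1000


def backoff_delay_ms(attempt: int, rng: random.Random) -> float:
    """Delay before retry number `attempt` (0-based), capped at MAX_DELAY_MS."""
    base = MIN_DELAY_MS * FACTOR ** attempt
    jitter = 1.0 + rng.random()  # assumed randomization: factor in [1, 2)
    return min(base * jitter, MAX_DELAY_MS)


def call_with_retries(
    fn: Callable[[], T],
    rng: Optional[random.Random] = None,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> T:
    """Call fn, retrying on any exception until retries or the time budget run out."""
    if rng is None:
        rng = random.Random()
    start = clock()
    attempt = 0
    while True:
        try:
            return fn()
        except Exception:
            elapsed_ms = (clock() - start) * 1000
            if attempt >= MAX_RETRIES or elapsed_ms >= MAX_RETRY_TIME_MS:
                raise  # give up and surface the last error
            sleep(backoff_delay_ms(attempt, rng) / 1000)
            attempt += 1
```

Passing `sleep` and `clock` as parameters keeps the sketch testable without real waiting.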

The block raises errors in cases such as:

- Invalid schema (schema validation failure)

- Missing LLM provider configuration

- API errors and timeouts

- Aborted requests

Always validate the output of the Extract Data block, especially when working with critical data or making important decisions based on the extracted information.

Example: Extracting Contact Information

- Add an Extract Data block to your flow.

- Connect your input text (e.g., an email or document) to the Context input.

- Define a schema in the block settings listing the contact fields you want to extract.

- Select your desired provider and model.

- Run your flow. The block outputs the extracted contact information, structured according to your schema.
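As a hypothetical illustration of this walkthrough, a contact schema might contain name, email, and phone fields; these fields and their descriptions are assumptions, not the example from the original settings. Following the advice to always validate output, the sketch also flags extracted contacts whose email does not look like an address. The regex is a deliberately loose sanity check, not a full email validator, and `suspicious_contacts` is a hypothetical helper.

```python
import re
from typing import Any

# Hypothetical schema: field name -> (type, description for the model).
CONTACT_SCHEMA = {
    "name": ("string", "The person's full name"),
    "email": ("string", "The person's email address"),
    "phone": ("string", "The person's phone number, as written in the text"),
}

# Loose shape check: something@something.something with no spaces.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def suspicious_contacts(results: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Return extracted contacts whose email field is missing or malformed."""
    return [r for r in results if not EMAIL_RE.match(str(r.get("email", "")))]
```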